We believe emotion AI can unlock human potential and improve everything from learning to health care. We believe in enhancing human capability, not replacing it.

Our work is strengthened by having safety and ethics at its core. We apply these principles at every stage of building our software.

We believe that understanding human emotions through technology is essential for creating experiences that truly resonate with users and enhance human health and performance. However, interpreting non-verbal behaviour presents certain risks, such as privacy concerns, manipulation, or biased assessments.

That’s why all commercial applications powered by our APIs are held to the highest ethical standards.

Emotional data is protected by strict controls over its use and retention, and consent is always obtained.

Any use of our technology that violates laws or encourages illegal activities is prohibited. Harmful uses, such as generating discriminatory, harassing, or deceptive content, are also prohibited. This ensures that the AI is not used in ways that might manipulate or harm individuals emotionally.

Our technology is applied ethically, promoting empathy and positive experiences, while avoiding misuse or harmful outcomes.

By integrating these safeguards, we ensure that our technology enhances human performance and well-being responsibly and transparently.

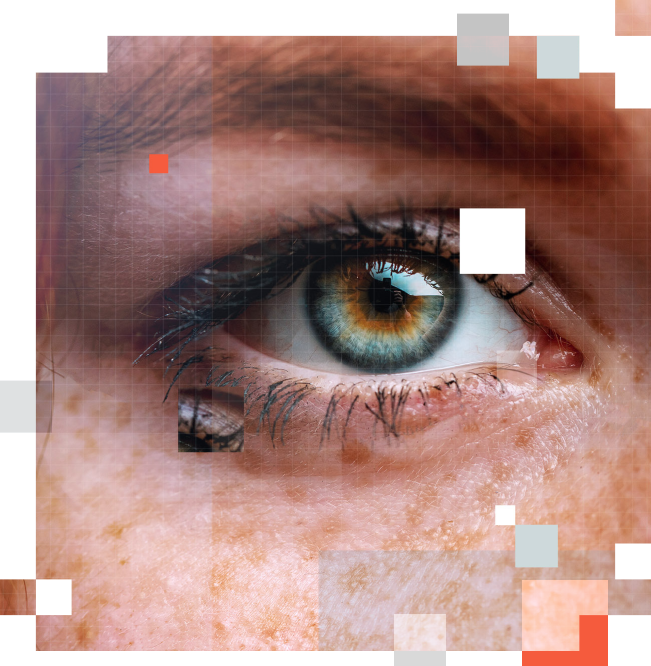

The eyes provide richer data and deeper insights than other data sources such as facial recognition or heart rate variation. Eye data is also easier to capture unobtrusively during a task.

Eye movement data also has the critical feature of being non-sensitive for the user. We do not capture images of your face or store images of your eyes. Instead, we store data that simply tells us where your eyes are pointing and what size your pupils are. This means the data cannot be connected directly back to any one person: once it is anonymised, there is no way of knowing who it belongs to. Eye tracking provides insights into how a person might be thinking or feeling in a given moment, without the need to store any sensitive data that easily identifies that person. This enables us to provide personalised and optimised experiences without undue risks around privacy and security.
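To make the description above concrete, here is a minimal sketch of what such a stored record could look like. The field names, units, and the `anonymise_session` helper are hypothetical and chosen only for illustration; they are not our actual schema or API. The point is that each sample holds a gaze position and a pupil size, with no image data and no user identifier.

```python
from dataclasses import dataclass, asdict
import uuid


@dataclass(frozen=True)
class GazeSample:
    """One eye-tracking sample (hypothetical schema): gaze position and pupil size only."""
    timestamp_ms: int          # time since the start of the session
    gaze_x: float              # assumed: normalised horizontal gaze position, 0.0 to 1.0
    gaze_y: float              # assumed: normalised vertical gaze position, 0.0 to 1.0
    pupil_diameter_mm: float   # assumed unit: millimetres


def anonymise_session(samples):
    """Package samples under a random token that is not derived from the user's identity."""
    return {
        "session_token": uuid.uuid4().hex,  # random, so it cannot be mapped back to a person
        "samples": [asdict(s) for s in samples],
    }
```

A session packaged this way contains only timestamps, gaze coordinates, and pupil sizes under a random token.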

We are committed to providing clear and concise information about how we collect, process and protect data within our company.

We adhere to all applicable laws and regulations governing data protection, including but not limited to the General Data Protection Regulation (GDPR) and the Data Protection Act 2018.

We collect and process data only for specified, explicit, and legitimate purposes, ensuring that it is not further processed in a manner incompatible with those purposes.

We collect and retain only the minimum amount of data necessary for the purposes for which it is processed.

We maintain the integrity, security, and confidentiality of data by implementing appropriate technical and organisational measures to prevent unauthorised access, disclosure, alteration, or destruction.

While AI models can inherently carry the risk of bias due to the datasets they are trained on, even when efforts are made to include a diverse range of user groups, our approach minimises this issue through personalisation. When a new user first interacts with the system, the model undergoes a rapid re-training period.

In the first few minutes of use, the model tailors itself in real time based on the individual’s specific data, ensuring that any inherent biases are removed and the emotional or cognitive state estimation becomes personalised and precise for that person. This allows for more accurate, bias-free estimations tailored to each user’s unique profile.
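The idea of tailoring estimates to an individual can be illustrated with a deliberately simple stand-in. The sketch below is not our re-training procedure; it swaps in per-user baseline normalisation, where each reading is expressed relative to that user's own running mean and spread. The `UserBaseline` class and its method names are hypothetical.

```python
import math


class UserBaseline:
    """Running per-user baseline (Welford's algorithm) for a signal such as pupil diameter."""

    def __init__(self):
        self.n = 0        # number of readings seen so far
        self.mean = 0.0   # running mean of the readings
        self._m2 = 0.0    # running sum of squared deviations from the mean

    def update(self, value):
        """Fold one new reading into the baseline."""
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (value - self.mean)

    @property
    def std(self):
        """Sample standard deviation; 0.0 until at least two readings exist."""
        if self.n < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.n - 1))

    def normalise(self, value):
        """Express a reading as a deviation from this user's own baseline."""
        if self.std == 0.0:
            return 0.0
        return (value - self.mean) / self.std
```

With this kind of normalisation, two users whose resting pupil sizes differ receive the same score for the same relative change, which is the intuition behind estimating state against an individual profile rather than a population average.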